Securing inter-service communication in a microservice architecture

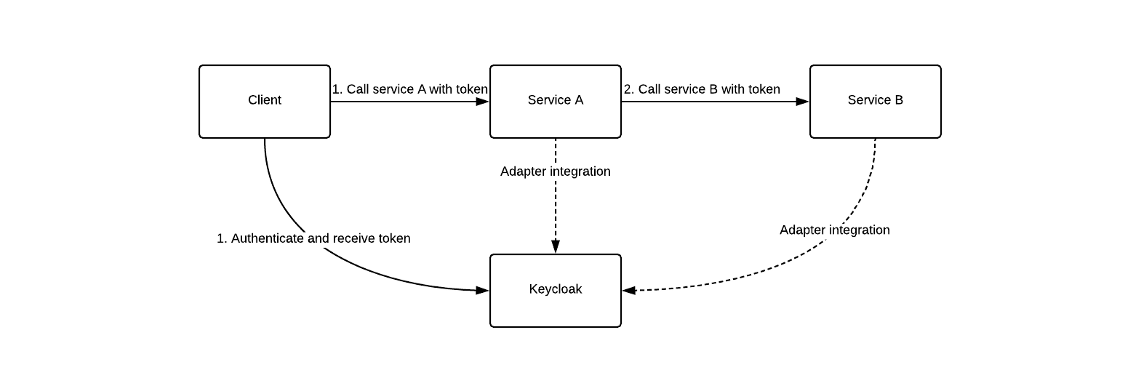

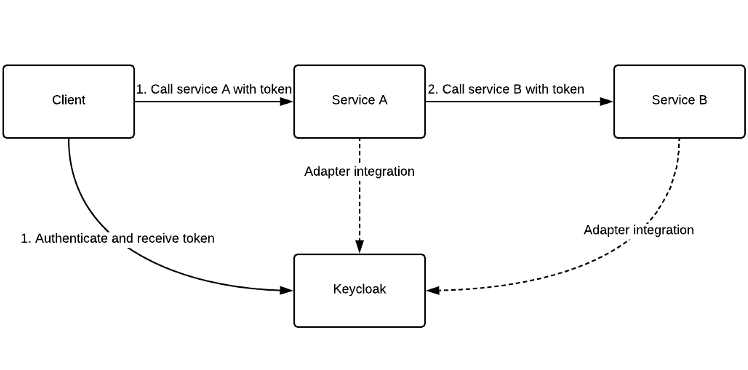

There are a couple of ways to secure inter-service communication in a microservice architecture: adopt the authentication proxy pattern, or pass the JWT along as the services invoke one another. Whichever you pick, security has to be addressed in every service.

In this article I will go over some puzzles and solutions that I found while exploring the second approach. I used the Spring framework to create the services and Keycloak as the authentication server.

Playing around with Keycloak is super easy: just head over to the download page, grab the server distribution and run the bin/standalone script, which starts the server backed by an embedded H2 database.

What we need out of Keycloak:

Two bearer-only clients: this OpenID Connect client flavor is used for services. When it is enabled, the adapter will not attempt to authenticate users, but only verify bearer tokens. "Adapter" is Keycloak lingo for a provided library that makes it easy to integrate your application with the server.

One public client: we will use this to authenticate a user. We can then invoke an endpoint in Keycloak and, if the authentication process went smoothly, we get back a token that we can send in the Authorization header when calling the services.

A user: just to have someone to actually use the services :)
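To make the role of the public client more concrete, here is a minimal sketch of how the token request could be assembled. It assumes that the public client is allowed to use the OAuth2 password grant (direct access grants in Keycloak) and that the server is served under /auth, as in the properties shown later. The class name, realm, client id and credentials are made up for illustration, and the sketch only builds the URL and the form body; sending the POST is left to whatever HTTP client you prefer.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical helper, not part of Keycloak: assembles a password-grant token request.
class TokenRequestSketch {

    // Keycloak exposes one OpenID Connect token endpoint per realm.
    static String tokenEndpoint(String authServerUrl, String realm) {
        return authServerUrl + "/realms/" + realm + "/protocol/openid-connect/token";
    }

    // Body for an application/x-www-form-urlencoded POST to the token endpoint.
    static String formBody(String clientId, String username, String password) {
        Map<String, String> params = new LinkedHashMap<>();
        params.put("grant_type", "password");
        params.put("client_id", clientId);
        params.put("username", username);
        params.put("password", password);
        return params.entrySet().stream()
                .map(e -> encode(e.getKey()) + "=" + encode(e.getValue()))
                .collect(Collectors.joining("&"));
    }

    private static String encode(String value) {
        try {
            return URLEncoder.encode(value, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException(e); // UTF-8 is always available
        }
    }

    public static void main(String[] args) {
        // Assumed local setup: realm "demo", client "public-client", user "alice".
        System.out.println(tokenEndpoint("http://localhost:8080/auth", "demo"));
        System.out.println(formBody("public-client", "alice", "s3cret pass"));
    }
}
```

If the credentials are accepted, the JSON response carries the token in its access_token field, and that value is what goes into the Authorization header of the calls to the services.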

Integrating a service with Keycloak is straightforward, and if you use Spring there is an adapter for that. Just add it as a dependency in your project and, in the application properties, set the connection details for the authentication server and some security rules for your endpoints.

    keycloak.auth-server-url=<AUTH_SERVER_URL>/auth
    keycloak.realm=<REALM_NAME>
    keycloak.resource=<CLIENT_ID>
    keycloak.bearer-only=true
    keycloak.securityConstraints[0].authRoles[0]=uma_authorization
    keycloak.securityConstraints[0].securityCollections[0].name=<NAME>
    keycloak.securityConstraints[0].securityCollections[0].patterns[0]=/*
    keycloak.securityConstraints[0].securityCollections[0].methods[0]=GET
    keycloak.securityConstraints[0].securityCollections[0].methods[1]=POST
    keycloak.securityConstraints[0].securityCollections[0].methods[2]=PUT
    keycloak.securityConstraints[0].securityCollections[0].methods[3]=DELETE

This forces every call to a service endpoint to be authenticated with a token. Cool!

The anatomy of service A is super simple: a controller with an injected Spring bean:

    @Autowired
    MyService myService;

    @GetMapping
    public ResponseEntity get() {
        logger.info("User: {}", accessToken.getSubject());
        String status = myService.callB();
        return status.equals("ok") ?
                ResponseEntity.ok().build() :
                ResponseEntity.badRequest().build();
    }

That bean, in turn, is used to call service B:

    @Autowired
    TokenString tokenString;

    public String callB() {
        RestTemplate restTemplate = new RestTemplate();
        HttpHeaders headers = new HttpHeaders();
        headers.set("Authorization", "bearer " + tokenString.getValue());
        HttpEntity<Void> entity = new HttpEntity<>(headers);
        ResponseEntity<String> re = restTemplate.exchange(<SERVICE_B_ADDRESS>, HttpMethod.GET, entity, String.class);
        return re.getStatusCode().equals(HttpStatus.OK) ? "ok" : "not ok";
    }

Not necessarily rocket science. The interesting part is how we get to the token string:

    @Bean
    @Scope(scopeName = WebApplicationContext.SCOPE_REQUEST,
            proxyMode = ScopedProxyMode.TARGET_CLASS)
    public TokenString tokenString() {
        HttpServletRequest request = ((ServletRequestAttributes)
                RequestContextHolder
                        .currentRequestAttributes())
                .getRequest();
        String value = request.getHeader("Authorization").split(" ")[1];
        return new TokenString(value);
    }
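The split(" ")[1] expression above assumes the header is always present and well formed; a request without an Authorization header would fail with a NullPointerException inside the bean factory method. A slightly more defensive variant could look like the sketch below. The helper class and method names are hypothetical and it uses no Spring types, so it can be tried in isolation.

```java
import java.util.Optional;

// Hypothetical helper: a defensive alternative to header.split(" ")[1].
class BearerTokenSketch {

    static Optional<String> extract(String authorizationHeader) {
        if (authorizationHeader == null) {
            return Optional.empty();
        }
        String[] parts = authorizationHeader.trim().split("\\s+");
        // Expect exactly "<scheme> <token>"; the scheme name is matched case-insensitively.
        if (parts.length != 2 || !parts[0].equalsIgnoreCase("Bearer")) {
            return Optional.empty();
        }
        return Optional.of(parts[1]);
    }

    public static void main(String[] args) {
        System.out.println(extract("Bearer abc.def.ghi"));  // Optional[abc.def.ghi]
        System.out.println(extract("Basic dXNlcjpwdw==")); // Optional.empty
        System.out.println(extract(null));                  // Optional.empty
    }
}
```

In the bean method, an empty result could then be turned into whatever error handling fits the service, instead of surfacing as an exception from the header parsing.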

TokenString itself is just a simple class that I used to make the code more expressive:

    public class TokenString {

        private final String value;

        public TokenString(String value) {
            this.value = value;
        }

        public String getValue() {
            return this.value;
        }
    }

In modern deployment environments, such as the cloud, the property of elasticity is super important. Elasticity is pretty much the degree to which a system is able to adapt to workload changes by provisioning and de-provisioning resources in an autonomic manner, such that at each point in time the available resources match the current demand as closely as possible.

To achieve that property, services need to be written with one very important thing in mind: state, or better yet the lack of it. Have a look at a comparison between the two here.

Coming back to our dumb service, it wouldn't have made any sense to keep the authorization token in a session-scoped or singleton-scoped bean; request scope was the logical choice. However, this has a very interesting hidden effect: the bean instance can only be accessed during the life of a request. We need to dissect this notion of a request, because something else is hidden here, and to uncover it we need to take a closer look at the anatomy of a servlet HTTP request.

During normal servlet request processing, a thread is allocated to handle that particular request. Spring uses the DispatcherServlet, the mechanism through which the framework matches an incoming request URI to the appropriate handler, either web/UI or API. It also provides mapping and exception handling utilities. Once the response is generated, the thread is released and can serve another request. A request-scoped bean lives in the request attributes, which Spring binds to the processing thread through a ThreadLocal, so other threads cannot see it.

In a microservice architecture, where some services rely on the results of one or more other services, this model of handling requests becomes inefficient. The thread processing the request just sits idle until all downstream requests have completed and the response can be generated, which leaves the CPU underutilized. A solution had to be found, and that solution is asynchronous, non-blocking request processing. It allows developers to write endpoints that return a CompletableFuture:
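The thread-bound nature of that storage can be shown without any Spring code. The sketch below is plain Java under simplified assumptions: CONTEXT is a made-up stand-in for the thread-bound request attributes, and a single-thread executor plays the role of "some other thread".

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Plain-Java illustration; CONTEXT stands in for thread-bound request attributes.
class ThreadBoundSketch {

    static final ThreadLocal<String> CONTEXT = new ThreadLocal<>();

    // Sets a value on the calling thread, then reads the same ThreadLocal from another thread.
    static String readFromOtherThread(String value) {
        CONTEXT.set(value);
        ExecutorService worker = Executors.newSingleThreadExecutor();
        try {
            Callable<String> read = CONTEXT::get;
            return worker.submit(read).get();
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            worker.shutdown();
            CONTEXT.remove();
        }
    }

    public static void main(String[] args) {
        CONTEXT.set("token-123");
        System.out.println("same thread:  " + CONTEXT.get());                     // token-123
        System.out.println("other thread: " + readFromOtherThread("token-123")); // null
    }
}
```

The value is only visible on the thread that set it, which is fine as long as one thread handles the request from start to finish.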

    @GetMapping
    public CompletableFuture<ResponseEntity> get() {
        return supplyAsync(() -> ResponseEntity.ok().build());
    }

Not the most eloquent example I know, but bear with me for a while. In our use case we have service A calling service B. This is an operation that fits nicely under the new paradigm; we can send out the request to service B and at the same time free the underlying thread processing the request so that it can be used by other requests. We can re-imagine the bean sending out the request like this:

    @Autowired
    TokenString tokenString;

    public CompletableFuture<String> callBAsync() {
        return supplyAsync(() -> {
            logger.info("calling B");
            RestTemplate restTemplate = new RestTemplate();
            HttpHeaders headers = new HttpHeaders();
            headers.set("Authorization", "bearer " + tokenString.getValue());
            HttpEntity<Void> entity = new HttpEntity<>(headers);
            ResponseEntity<String> re = restTemplate.exchange(
                    <SERVICE_B_ADDRESS>,
                    HttpMethod.GET,
                    entity,
                    String.class);
            return re.getStatusCode().equals(HttpStatus.OK) ?
                    "ok" : "not ok";
        });
    }

and the controller method:

    @GetMapping
    public CompletableFuture<ResponseEntity> get() {
        logger.info("GET request");
        return myService.callBAsync().thenApply(status ->
                status.equals("ok") ?
                        ResponseEntity.ok().build() :
                        ResponseEntity.badRequest().build());
    }

Looking at the log output:

    [http-nio-8082-exec-3] GET request
    [ForkJoinPool.commonPool-worker-1] calling B

We can see that the responsibility of calling service B has moved from the request thread to a different thread from the fork join pool of worker threads. And this is where the scope name "request" generates dissonance. From an HTTP client's point of view there is just a single HTTP request being executed; the request scope, however, is bound to the request processing thread. When executed, the above code yields an exception:

    org.springframework.beans.factory.BeanCreationException: Error creating bean with name 'scopedTarget.tokenString': Scope 'request' is not active for the current thread; consider defining a scoped proxy for this bean if you intend to refer to it from a singleton; nested exception is java.lang.IllegalStateException: No thread-bound request found: Are you referring to request attributes outside of an actual web request, or processing a request outside of the originally receiving thread? If you are actually operating within a web request and still receive this message, your code is probably running outside of DispatcherServlet/DispatcherPortlet: In this case, use RequestContextListener or RequestContextFilter to expose the current request.

This is thrown in the KeycloakConfig class when trying to generate the TokenString by accessing the request attributes. In order to bypass this we need to turn on a flag in the DispatcherServlet:

    @Configuration
    public class ServletConfig {

        @Bean
        public DispatcherServlet dispatcherServlet() {
            DispatcherServlet servlet = new DispatcherServlet();
            servlet.setThreadContextInheritable(true);
            return servlet;
        }
    }

Setting the threadContextInheritable property to true tells Spring to expose the request attributes (along with the locale context) as inheritable for child threads.
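Under the hood this kind of inheritance relies on InheritableThreadLocal, and its behaviour is worth seeing in isolation. The sketch below is plain Java with made-up names (CONTEXT stands in for the inheritable request attributes). It shows that a value is copied to a thread at the moment that thread is created, which also means a pooled thread that already existed does not pick up values set later.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Plain-Java illustration; CONTEXT stands in for inheritable request attributes.
class InheritableContextSketch {

    static final InheritableThreadLocal<String> CONTEXT = new InheritableThreadLocal<>();

    // A thread created after the value was set receives the value at construction time.
    static String readFromNewThread(String value) {
        CONTEXT.set(value);
        String[] seen = new String[1];
        Thread child = new Thread(() -> seen[0] = CONTEXT.get());
        child.start();
        try {
            child.join();
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        } finally {
            CONTEXT.remove();
        }
        return seen[0];
    }

    // A pooled thread that existed before the value was set does not see it.
    static String readFromWarmPoolThread(String value) {
        CONTEXT.remove();
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            pool.submit(() -> { }).get();   // forces the worker thread to be created now
            CONTEXT.set(value);
            Callable<String> read = CONTEXT::get;
            return pool.submit(read).get();
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
            CONTEXT.remove();
        }
    }

    public static void main(String[] args) {
        System.out.println("new thread:  " + readFromNewThread("token-123"));      // token-123
        System.out.println("warm worker: " + readFromWarmPoolThread("token-123")); // null
    }
}
```

Whether a worker of the common fork join pool sees the current request therefore depends on when that worker was created, so this is worth verifying under load in your own setup. The Spring Javadoc for this setter also carries a warning about combining inheritance with thread pools.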

The second step is to define a custom scope for our bean:

    public class InheritedThreadScope implements Scope {

        private static final Logger logger = LoggerFactory.getLogger(InheritedThreadScope.class);

        // Scoped beans are cached per thread; a thread created by a parent that
        // already has a map starts out with a reference to that same map.
        private final ThreadLocal<Map<String, Object>> threadScope =
                new InheritableThreadLocal<Map<String, Object>>() {
                    @Override
                    protected Map<String, Object> initialValue() {
                        return new HashMap<>();
                    }
                };

        @Override
        public Object get(String name, ObjectFactory<?> objectFactory) {
            Map<String, Object> scope = this.threadScope.get();
            Object object = scope.get(name);
            if (object == null) {
                object = objectFactory.getObject();
                scope.put(name, object);
            }
            return object;
        }

        @Override
        public Object remove(String name) {
            Map<String, Object> scope = this.threadScope.get();
            return scope.remove(name);
        }

        @Override
        public void registerDestructionCallback(String name, Runnable callback) {
            logger.warn("InheritedThreadScope does not support destruction callbacks. " +
                    "Consider using RequestScope in a web environment.");
        }

        // The two methods below were missing from the listing; the Scope interface
        // requires them, and these minimal versions are an assumption.
        @Override
        public Object resolveContextualObject(String key) {
            return null;
        }

        @Override
        public String getConversationId() {
            return Thread.currentThread().getName();
        }
    }

Register it with Spring in a config class:

    @Bean
    public CustomScopeConfigurer customScopeConfigurer() {
        CustomScopeConfigurer configurer = new CustomScopeConfigurer();
        Map<String, Object> scopes = new HashMap<>();
        scopes.put(THREAD_INHERITED, new InheritedThreadScope());
        configurer.setScopes(scopes);
        return configurer;
    }

and redefine the scope of the TokenString bean:

    @Bean
    @Scope(scopeName = ScopeConfig.THREAD_INHERITED,
            proxyMode = ScopedProxyMode.TARGET_CLASS)
    public TokenString tokenString() {
        HttpServletRequest request = ((ServletRequestAttributes)
                RequestContextHolder
                        .currentRequestAttributes())
                .getRequest();
        String value = request.getHeader("Authorization").split(" ")[1];
        return new TokenString(value);
    }

This will allow child threads to have access to the data defined in the parent thread.
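One thing to keep an eye on with a thread-bound scope is that nothing clears the per-thread map when a request ends, while servlet containers and the fork join pool reuse their threads. The sketch below is a simplified, Spring-free stand-in for the get-or-create logic of such a scope (all names are hypothetical, and it uses a plain ThreadLocal for brevity). It suggests that an object cached on a reused thread can outlive the request it was created for unless it is removed explicitly.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Supplier;

// Simplified stand-in for the get-or-create logic of a thread-bound scope.
class ThreadScopeCacheSketch {

    private static final ThreadLocal<Map<String, Object>> SCOPE =
            ThreadLocal.withInitial(HashMap::new);

    // Returns the object cached for the current thread, creating it on first access.
    static Object get(String name, Supplier<Object> factory) {
        return SCOPE.get().computeIfAbsent(name, key -> factory.get());
    }

    // Drops the cached object so the next access on this thread builds a fresh one.
    static Object remove(String name) {
        return SCOPE.get().remove(name);
    }

    // Simulates two requests handled one after the other by the same pooled thread.
    static String[] twoRequestsOnOneThread(boolean cleanUpBetween) {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            String first = pool.submit(() -> {
                String token = (String) get("tokenString", () -> "token-of-request-1");
                if (cleanUpBetween) {
                    remove("tokenString");
                }
                return token;
            }).get();
            String second = pool.submit(
                    () -> (String) get("tokenString", () -> "token-of-request-2")).get();
            return new String[] {first, second};
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(String.join(", ", twoRequestsOnOneThread(false)));
        System.out.println(String.join(", ", twoRequestsOnOneThread(true)));
    }
}
```

Without the clean-up, the second simulated request gets the first request's token back. In a real service, a filter or interceptor that calls remove once the response is complete would be one way to avoid that, though where exactly to hook it in depends on the setup.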

For a company that uses a microservice architecture, I see the concepts presented in this article as part of the responsibilities of the devops team. This is actually the “dev” in devops. That team could offer archetypes that a developer who wants to build a new service could then use. It would be a huge productivity boost if developers had everything from logging to security from the get-go, rather than having to go through all the boilerplate of configuring everything.